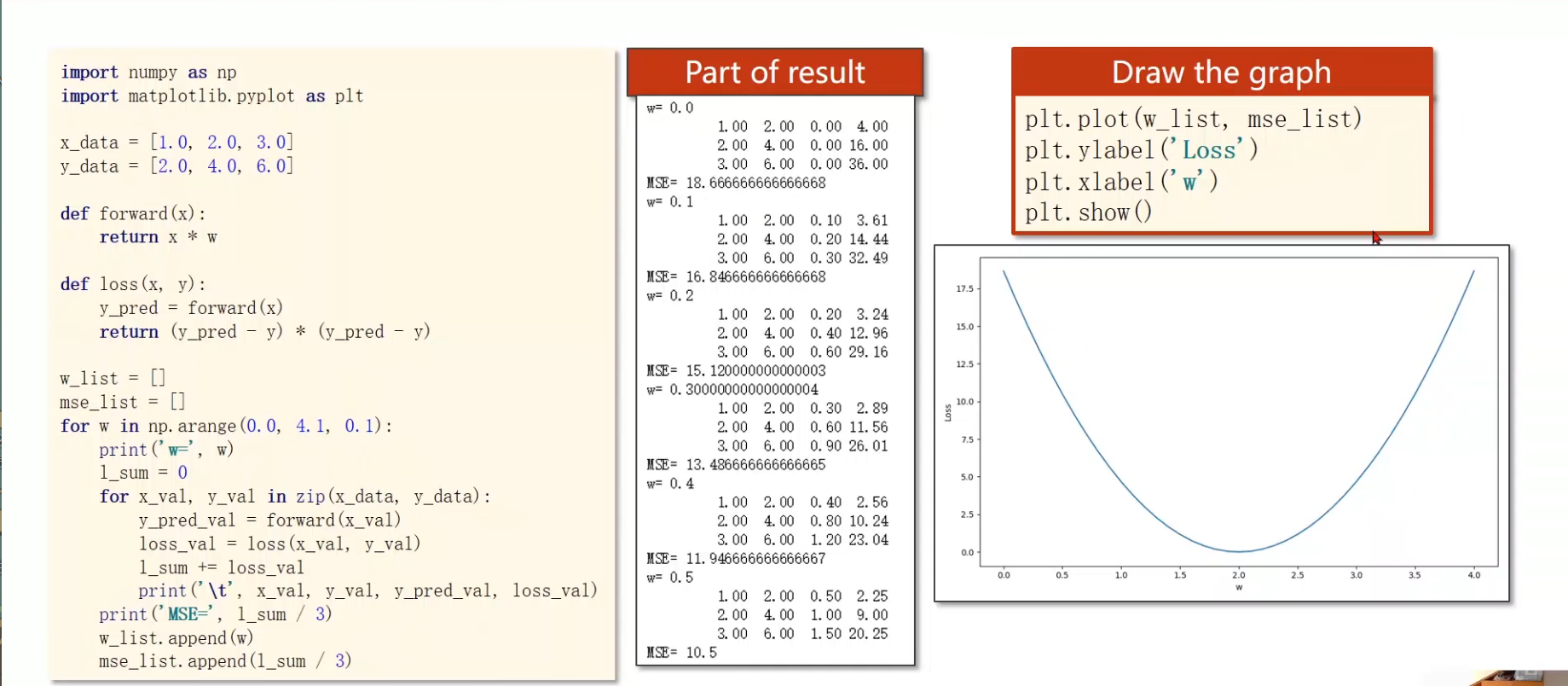

From Linear Regression: Exhaustive Search

Prepare the training set.

Define the model: $\hat{y} = x \cdot \omega$

Define the loss function: $loss = (\hat{y} - y)^2 = (x \cdot \omega - y)^2$

The list `w_list` stores the candidate weights $\omega$, and `mse_list` stores the cost value for each $\omega$.

Compute the cost value for each $\omega$ in the range 0.0 to 4.0, with step 0.1.

The value of the cost function is the loss averaged over all training samples (the mean squared error, hence `mse_list`).

Find the minimum loss (`min_loss`) and the $\omega$ that achieves it.
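The exhaustive search above can be sketched in plain Python. This is a minimal sketch, assuming the same training set as the PyTorch example later in these notes ($x = [1, 2, 3]$, $y = [2, 4, 6]$) and `np.arange(0.0, 4.0, 0.1)` for the candidate weights; the helper names `forward` and `loss` are illustrative.

```python
import numpy as np

# Assumed training set: y = 2x
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def forward(x, w):
    # Linear model without bias: y_hat = x * w
    return x * w

def loss(x, y, w):
    # Squared error for a single sample
    y_hat = forward(x, w)
    return (y_hat - y) ** 2

w_list = []    # candidate weights
mse_list = []  # cost (MSE) for each candidate weight

for w in np.arange(0.0, 4.0, 0.1):
    l_sum = 0.0
    for x_val, y_val in zip(x_data, y_data):
        l_sum += loss(x_val, y_val, w)
    w_list.append(w)
    mse_list.append(l_sum / len(x_data))  # average loss over the samples

best = int(np.argmin(mse_list))
print(f"best w = {w_list[best]:.1f}, min MSE = {mse_list[best]:.4f}")
```

Plotting `w_list` against `mse_list` reproduces the kind of cost curve shown in the figures: a parabola with its minimum at $\omega = 2$.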

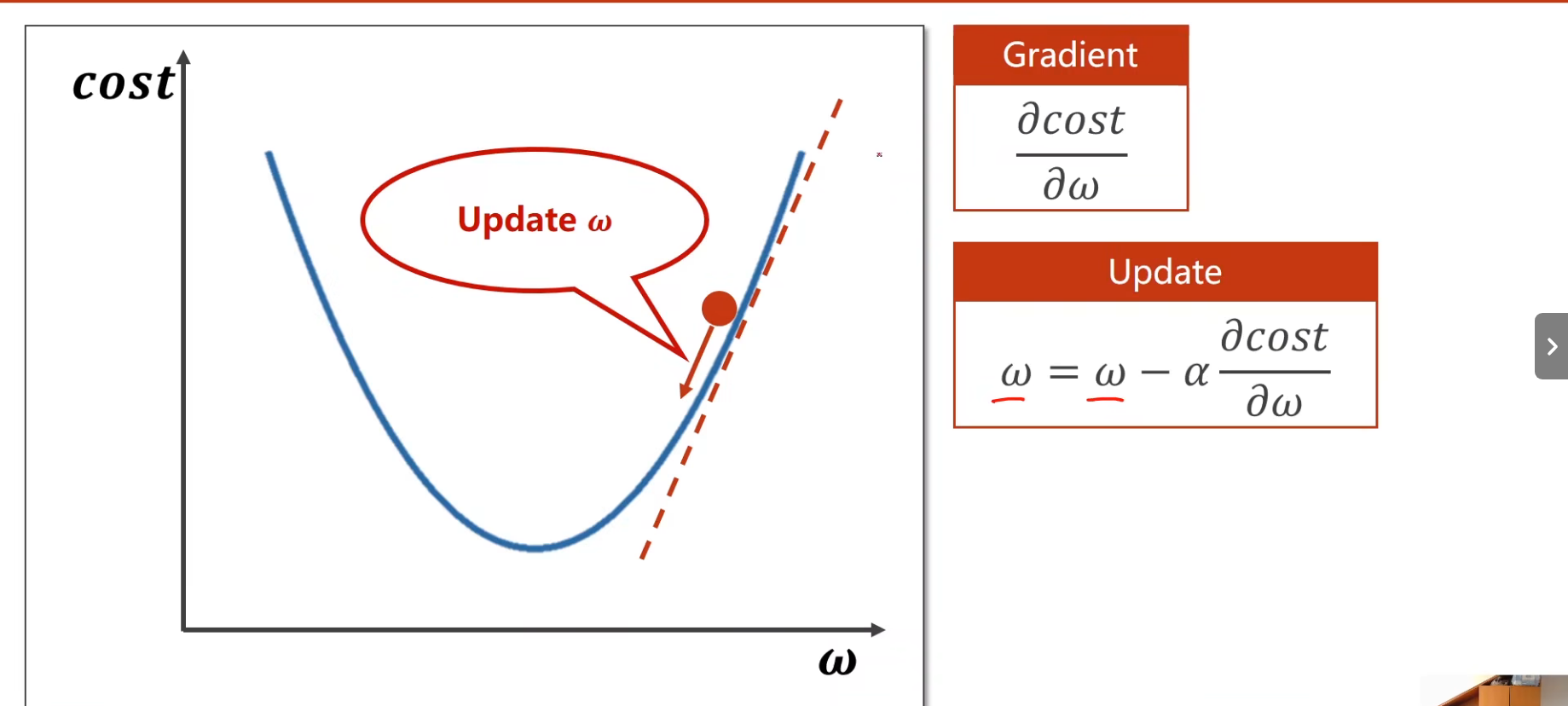

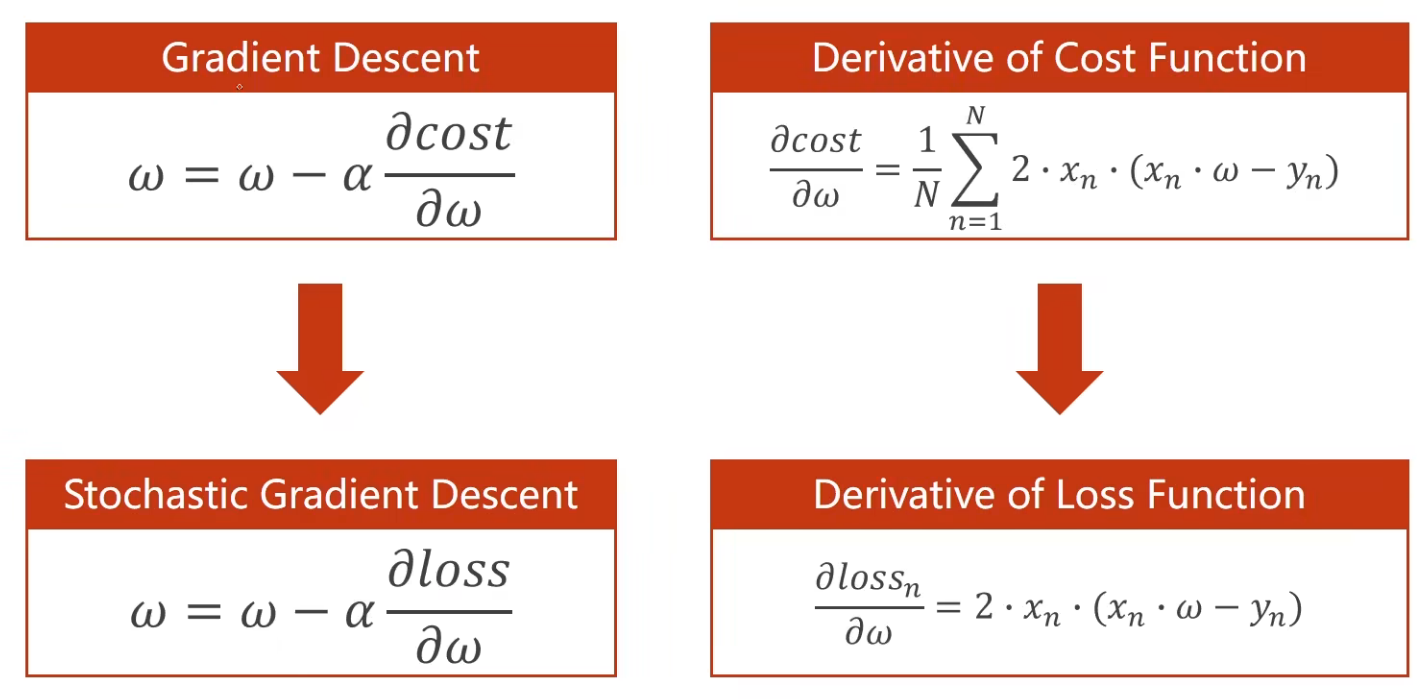

Gradient Descent

$\alpha$: the learning rate, i.e. the step size of each update.

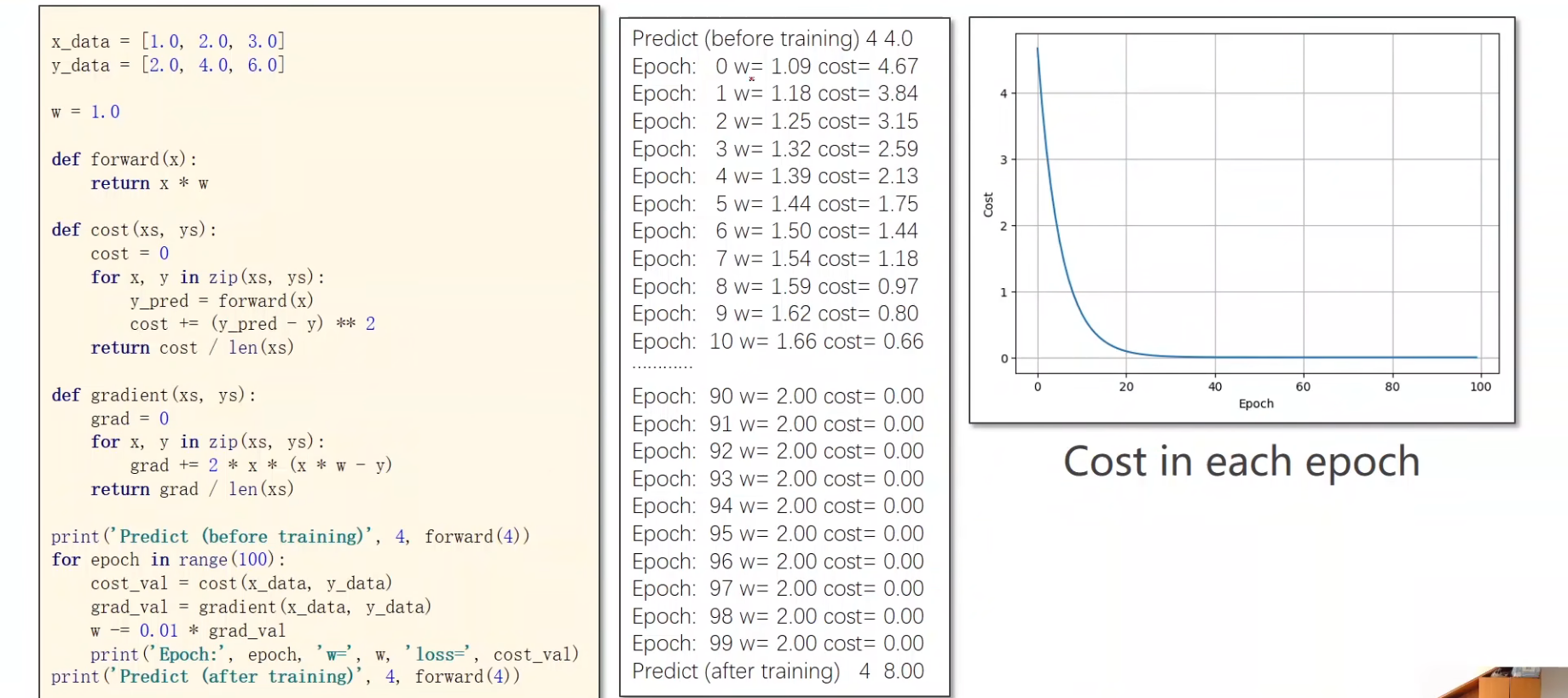

Use the model $\hat{y} = x \cdot \omega$:

Code and result:

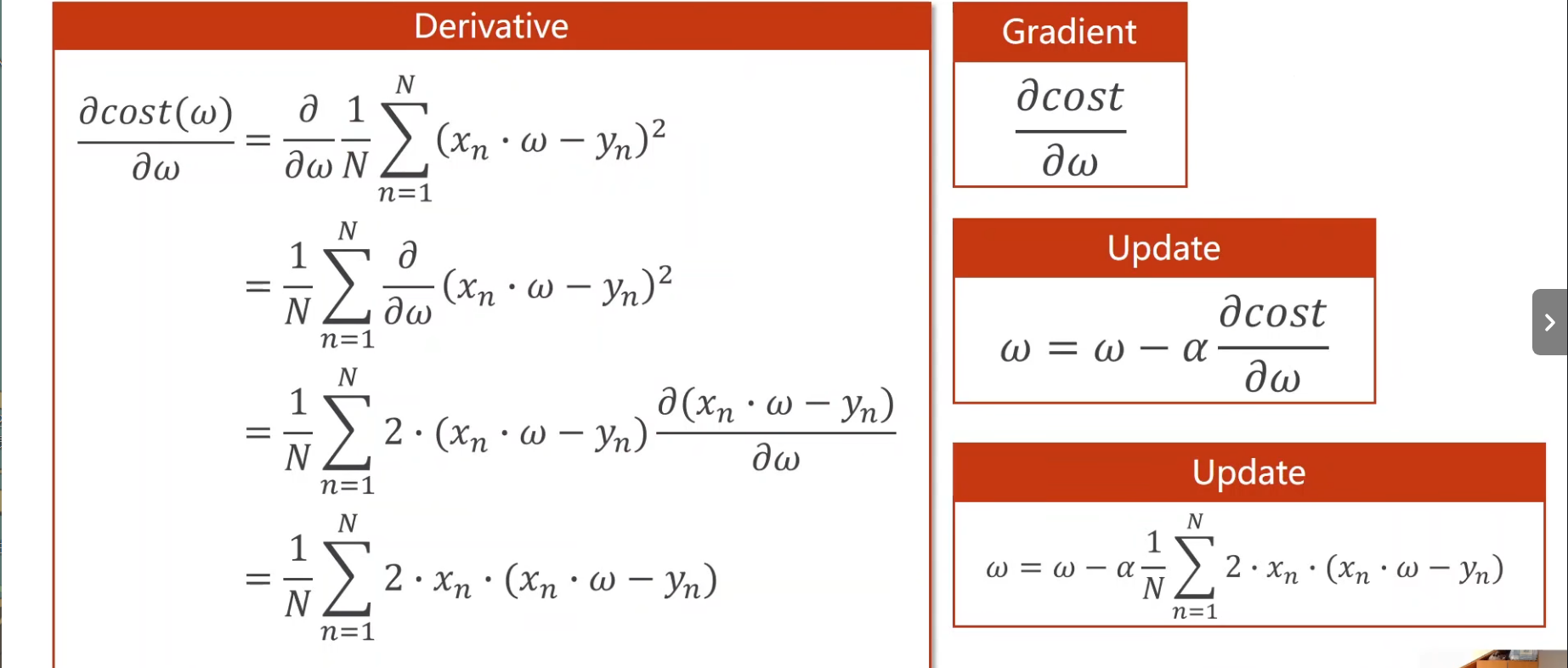

Define the cost function: $$cost(\omega) = \frac{1}{N}\sum_{n=1}^{N}(\hat{y}_n - y_n)^2$$

Define the gradient function: $$\frac{\partial cost}{\partial \omega} = \frac{1}{N}\sum_{n=1}^{N} 2 \cdot x_n \cdot (x_n \cdot \omega - y_n)$$

Do the update: $$\omega = \omega - \alpha\frac{\partial cost}{\partial \omega}$$
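The cost, gradient, and update rule above translate directly into a loop. This is a minimal sketch, assuming the training set $x = [1, 2, 3]$, $y = [2, 4, 6]$, an initial guess $\omega = 1.0$, $\alpha = 0.01$, and 100 epochs; none of these values are prescribed by the notes.

```python
# Assumed training set: y = 2x
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = 1.0       # initial guess (assumption)
alpha = 0.01  # learning rate (assumption)

def cost(xs, ys, w):
    # Mean squared error over the whole training set
    return sum((x * w - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient(xs, ys, w):
    # d(cost)/dw = 1/N * sum_n 2 * x_n * (x_n * w - y_n)
    return sum(2 * x * (x * w - y) for x, y in zip(xs, ys)) / len(xs)

for epoch in range(100):
    w -= alpha * gradient(x_data, y_data, w)  # w = w - alpha * d(cost)/dw

print(f"w = {w:.4f}, cost = {cost(x_data, y_data, w):.6f}")
```

Every update uses the gradient averaged over all samples, so this is full-batch gradient descent.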

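Stochastic Gradient Descent (SGD) replaces the full-batch gradient with the gradient of a single randomly chosen sample. Its noisy updates help escape saddle points, but samples must be processed one after another, so it cannot exploit parallelism and has high time complexity.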

Mini-batch gradient descent computes each update on a small batch of samples, balancing time complexity against accuracy.
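The three variants differ only in how many samples feed each update, so one function with a batch-size parameter can sketch all of them. This is an illustrative sketch, assuming the same toy data, $\omega_0 = 1.0$, $\alpha = 0.01$, and a per-epoch shuffle; `train` and `grad_on_batch` are hypothetical helper names.

```python
import random

# Assumed training set: y = 2x
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

def grad_on_batch(batch, w):
    # Average gradient of (x*w - y)^2 over the samples in the batch
    return sum(2 * x * (x * w - y) for x, y in batch) / len(batch)

def train(batch_size, epochs=100, alpha=0.01, seed=0):
    # batch_size=1 -> SGD; batch_size=len(data) -> full-batch gradient descent
    rng = random.Random(seed)
    data = list(zip(x_data, y_data))
    w = 1.0
    for _ in range(epochs):
        rng.shuffle(data)  # new random sample order each epoch
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            w -= alpha * grad_on_batch(batch, w)  # one update per batch
    return w

print("SGD:       ", train(batch_size=1))
print("mini-batch:", train(batch_size=2))
print("full batch:", train(batch_size=3))
```

With `batch_size=1` the inner loop runs once per sample, and each step depends on the previous one; that sequential dependency is what prevents parallelism.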

Back Propagation

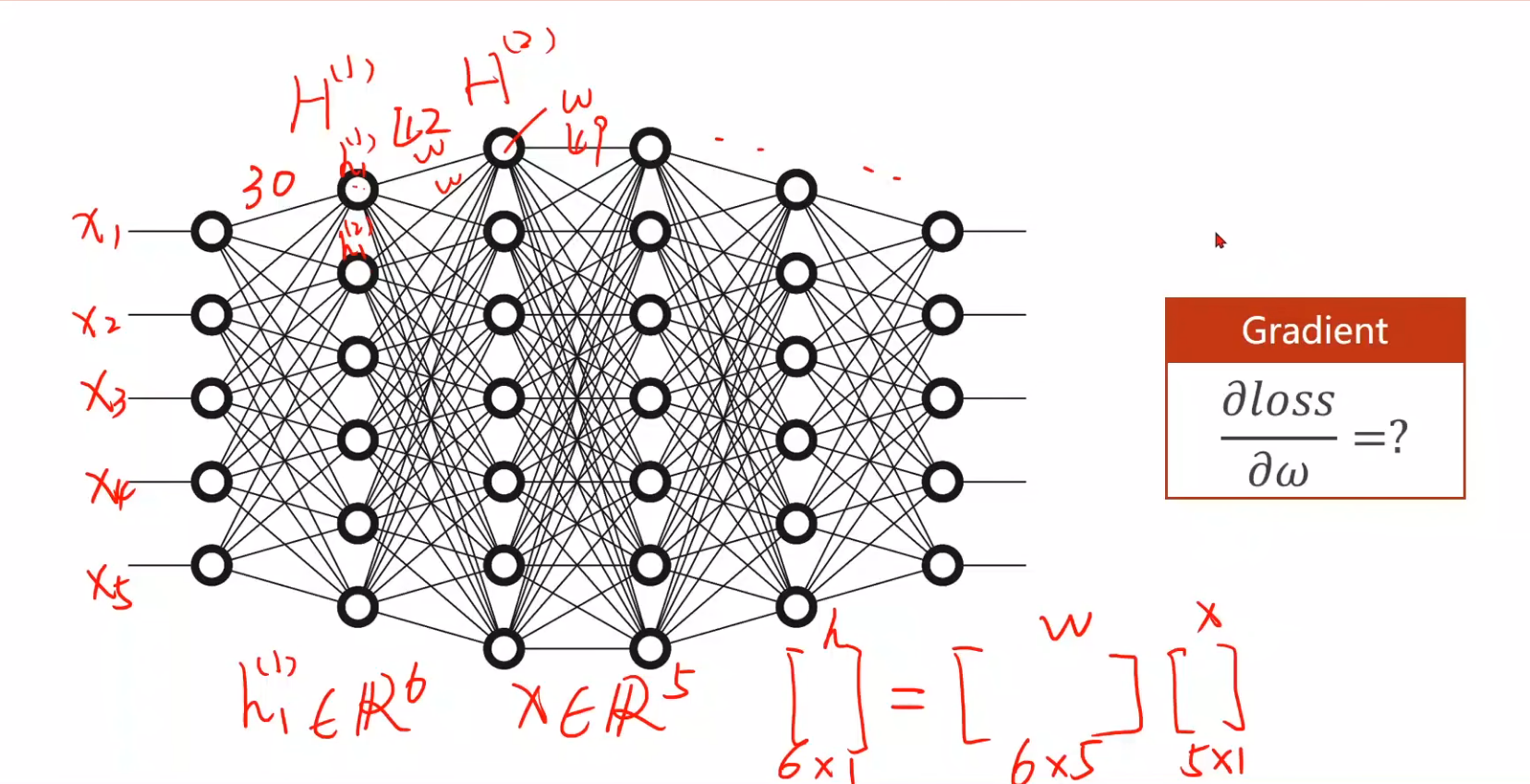

Neural Networks

A more complicated network:

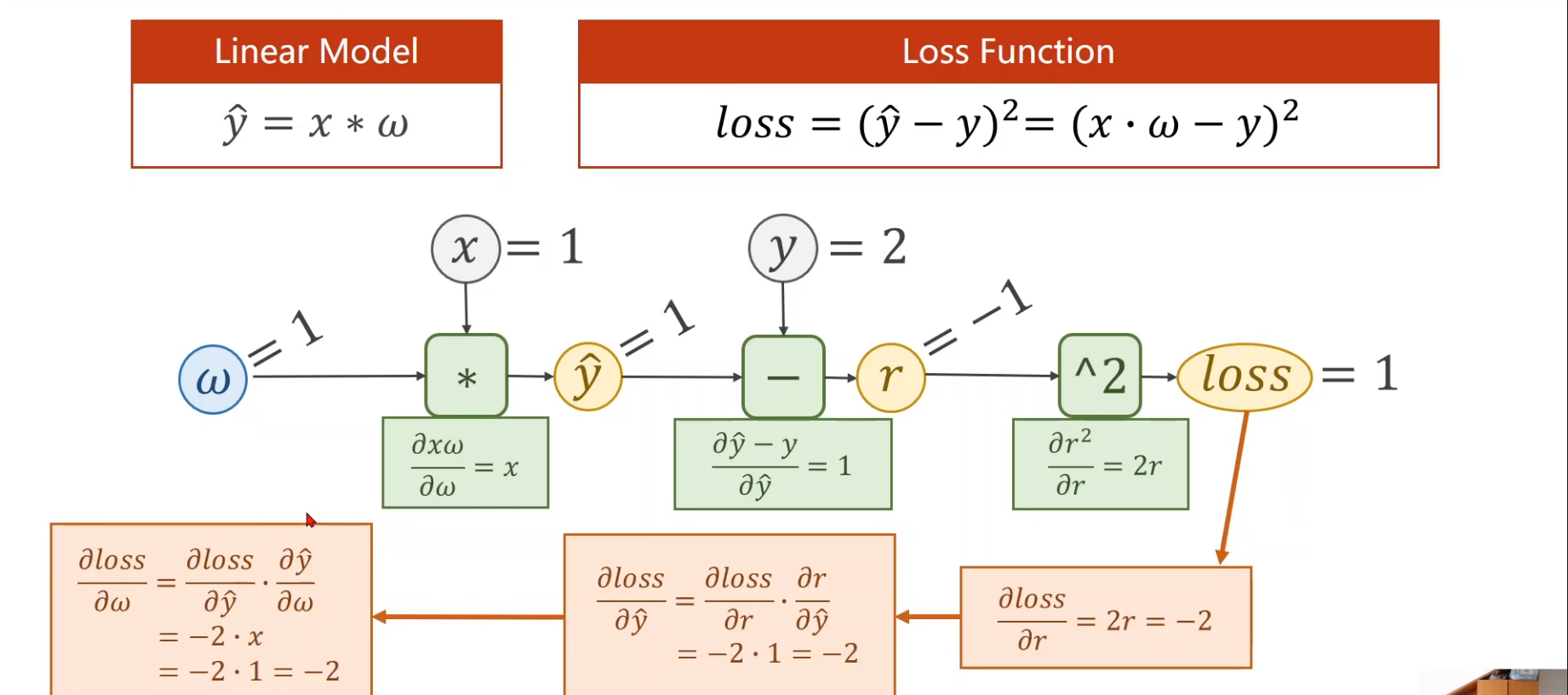

Still a simple example:

Derivative

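Nonlinear function (activation function): introduces non-linearity into the neural network. Without it, any stack of linear layers collapses into a single linear transformation.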
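The collapse of stacked linear layers can be checked numerically. This sketch assumes arbitrary layer sizes (3 → 4 → 2) and random inputs; it folds two `torch.nn.Linear` layers into one weight matrix $W = W_2 W_1$ and bias $b = W_2 b_1 + b_2$, then compares with a version that has a sigmoid in between.

```python
import torch

torch.manual_seed(0)

# Two stacked linear layers with no activation in between (sizes are arbitrary)
layer1 = torch.nn.Linear(3, 4)
layer2 = torch.nn.Linear(4, 2)

# Fold them into one equivalent linear layer: W = W2 @ W1, b = W2 @ b1 + b2
W = layer2.weight @ layer1.weight
b = layer2.weight @ layer1.bias + layer2.bias

x = torch.randn(5, 3)  # random placeholder inputs
with torch.no_grad():
    stacked = layer2(layer1(x))
    collapsed = x @ W.T + b
    with_sigmoid = layer2(torch.sigmoid(layer1(x)))

# Linear stack matches the single folded layer
print(torch.allclose(stacked, collapsed, atol=1e-5))
# Inserting a sigmoid breaks the equivalence
print(torch.allclose(with_sigmoid, collapsed, atol=1e-5))
```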

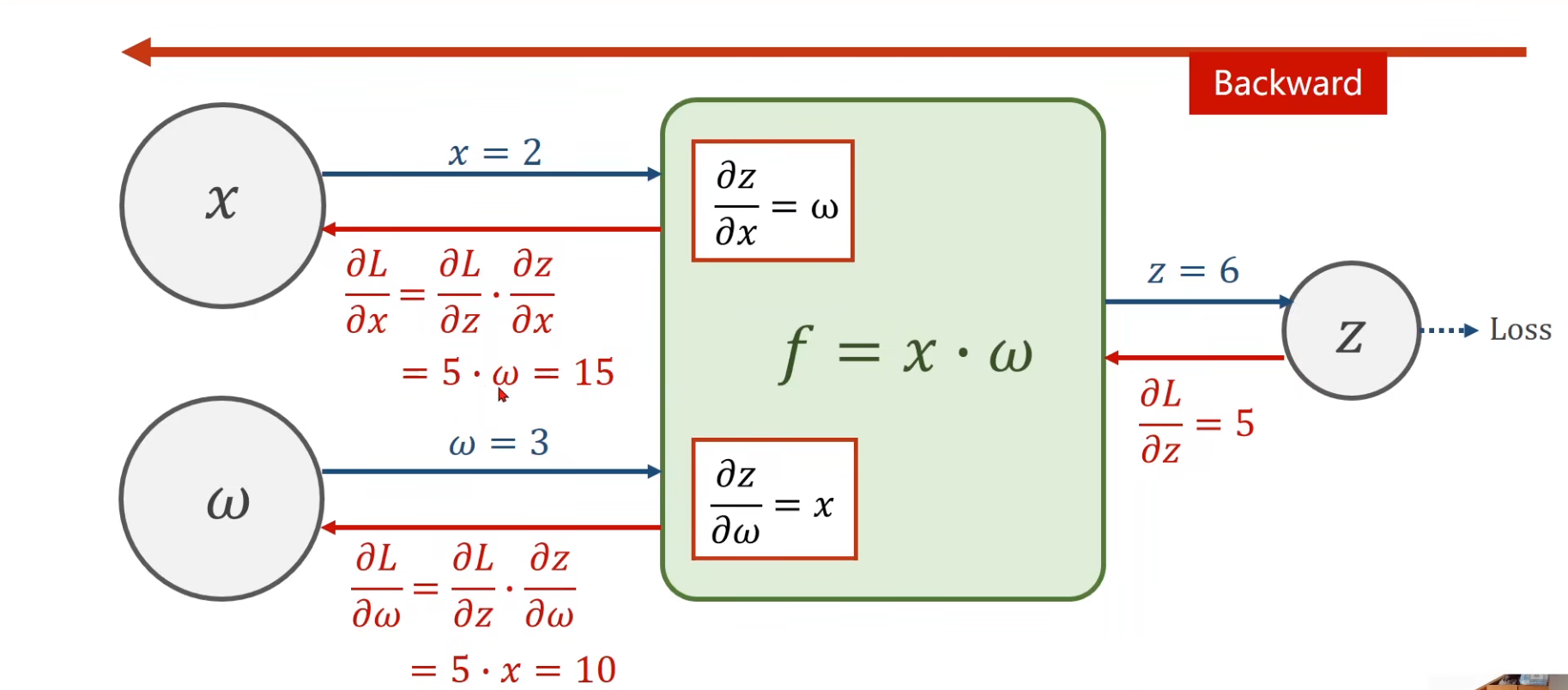

Backward

Backward propagation is the chain rule of derivatives applied node by node through the computational graph.
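As a worked example of the chain rule, take the linear model $\hat{y} = x \cdot \omega$ with $loss = (\hat{y} - y)^2$, and assume $x = 2$, $y = 4$, $\omega = 1$ (illustrative values):

$$\frac{\partial loss}{\partial \omega} = \frac{\partial loss}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial \omega} = 2(\hat{y} - y) \cdot x$$

$$\hat{y} = 2 \cdot 1 = 2, \qquad \frac{\partial loss}{\partial \omega} = 2 \cdot (2 - 4) \cdot 2 = -8$$

For a single sample ($N = 1$) this matches the gradient formula from the Gradient Descent section.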

Still a simple example:

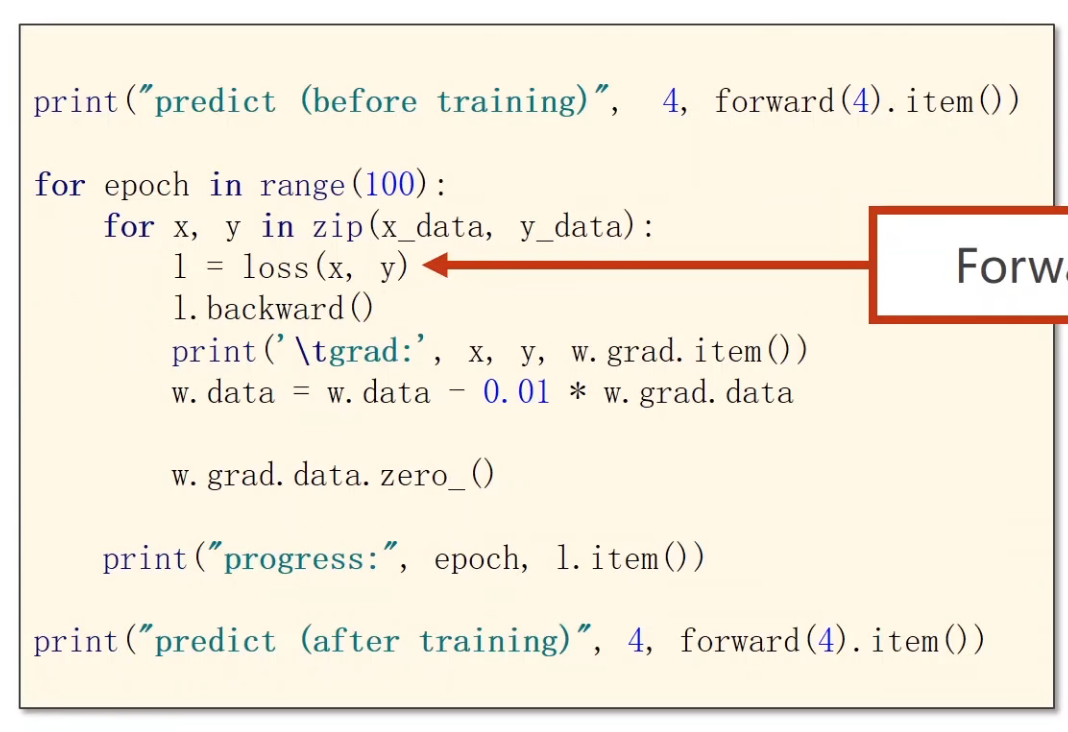

In PyTorch

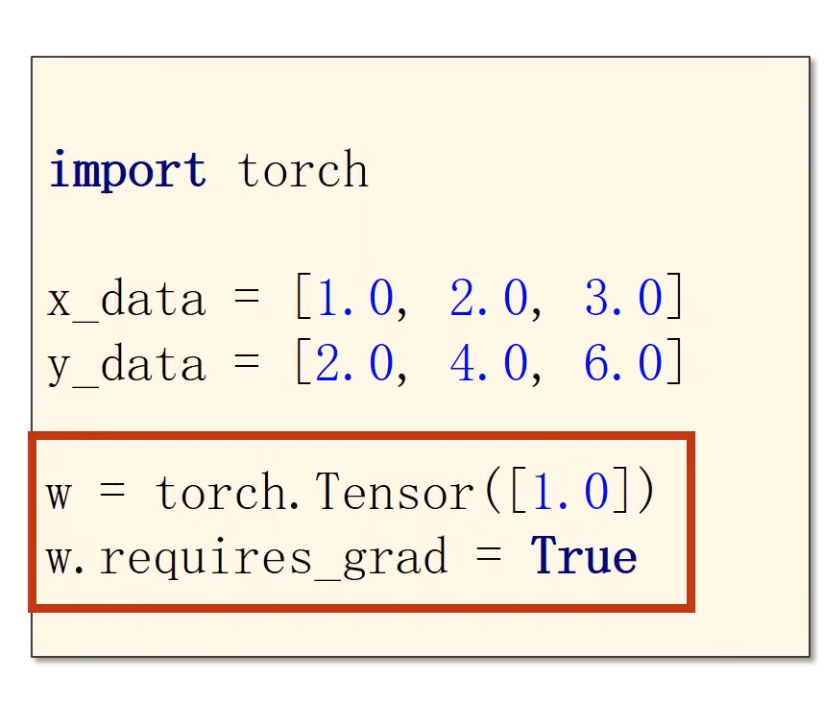

In PyTorch, the Tensor is the key component for building the dynamic computational graph.

Each Tensor holds `data` and `grad`, which store the value of the node and the gradient of the loss with respect to that node, respectively.

To use autograd, the Tensor's `requires_grad` attribute must be set to `True`.
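A quick way to see autograd at work is to compare a hand-derived chain-rule gradient with `w.grad` after `.backward()`. This minimal sketch assumes $x = 2$, $y = 4$ and an initial weight of 1.0.

```python
import torch

x, y = 2.0, 4.0
w = torch.tensor(1.0, requires_grad=True)  # autograd tracks operations on w

# Forward pass builds the computational graph
y_hat = x * w
loss = (y_hat - y) ** 2

# Backward by hand, via the chain rule:
# dloss/dw = dloss/dy_hat * dy_hat/dw = 2 * (y_hat - y) * x
manual_grad = 2 * (y_hat.item() - y) * x

# Backward with autograd: fills w.grad
loss.backward()
print(manual_grad, w.grad.item())  # -8.0 -8.0
```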

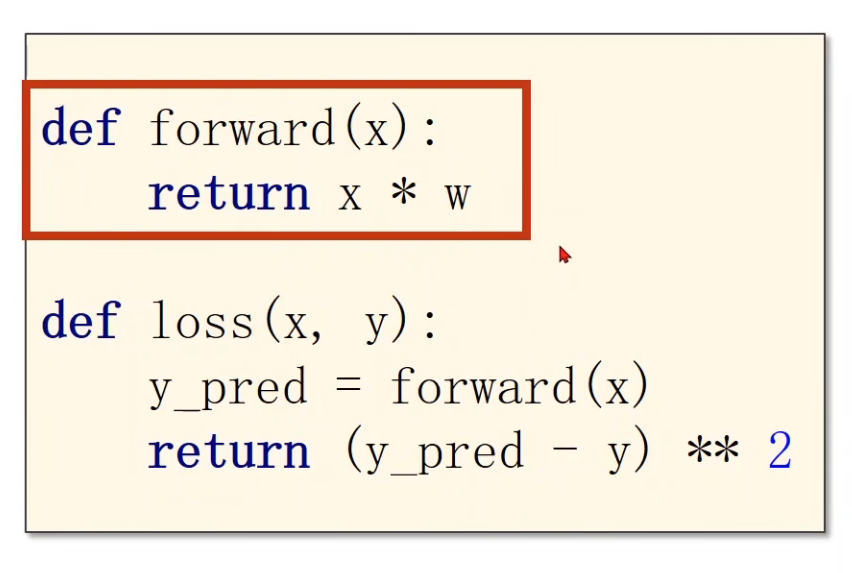

Model:

Define the linear model and the loss function.

Forward: compute the loss.

Backward: compute `grad` for every Tensor whose `requires_grad` is set to `True`.

Use `grad` to update the weight.

NOTICE:

Gradients computed by `.backward()` are accumulated.

So after each update, remember to reset the gradient to zero!
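The steps above (forward, backward, update, zero the gradient) can be sketched as a hand-written training loop. This is a minimal sketch, assuming the toy data $x = [1, 2, 3]$, $y = [2, 4, 6]$, a per-sample update, $\alpha = 0.01$ and 100 epochs; the first few lines show the accumulation that makes zeroing necessary.

```python
import torch

# Accumulation demo: two backward passes without zeroing add up
w_demo = torch.tensor(1.0, requires_grad=True)
for _ in range(2):
    ((2.0 * w_demo - 4.0) ** 2).backward()  # each pass contributes -8.0
print(w_demo.grad.item())  # -16.0

# Assumed training set: y = 2x
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = torch.tensor([1.0], requires_grad=True)  # weight tracked by autograd
alpha = 0.01

def forward(x):
    return x * w

def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) ** 2

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)           # forward: builds the graph
        l.backward()             # backward: adds dl/dw into w.grad
        with torch.no_grad():
            w -= alpha * w.grad  # update without recording it in the graph
        w.grad.zero_()           # reset, otherwise gradients keep accumulating

print("w =", w.item())
```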

Linear Regression with PyTorch

Prepare dataset

Design the model as a class inheriting from `nn.Module`

Construct the loss and optimizer using the PyTorch API

Training cycle: forward, backward, update

Code:

```python
import torch

# Training data: y = 2x, one feature per sample (shape [3, 1])
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

class LinearModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        # One input feature, one output: y_pred = w * x + b
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        y_pred = self.linear(x)
        return y_pred

model = LinearModel()
# Sum of squared errors; reduction='sum' replaces the deprecated
# size_average=False, reduce=True arguments
criterion = torch.nn.MSELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):
    y_pred = model(x_data)             # forward
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    optimizer.zero_grad()              # clear accumulated gradients
    loss.backward()                    # backward
    optimizer.step()                   # update w and b

print('w = ', model.linear.weight.item())
print('b = ', model.linear.bias.item())

x_test = torch.tensor([[4.0]])
y_test = model(x_test)
print('y_pred = ', y_test.item())
```

Optimizers in PyTorch:

torch.optim.Adagrad

torch.optim.Adam

torch.optim.Adamax

torch.optim.ASGD

torch.optim.LBFGS

torch.optim.RMSprop

torch.optim.Rprop

torch.optim.SGD
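Because every optimizer in this list shares the `zero_grad()` / `step()` interface, swapping one for another is a one-line change. This sketch assumes the same toy data, the same seeded initial weights for each run, and an untuned `lr=0.01` for all of them; `torch.optim.LBFGS` is left out because its `step()` requires a closure that re-evaluates the loss.

```python
import torch

x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[2.0], [4.0], [6.0]])

def train_with(make_optimizer, epochs=1000, seed=0):
    # Same data and same initial weights for every optimizer
    torch.manual_seed(seed)
    model = torch.nn.Linear(1, 1)
    criterion = torch.nn.MSELoss(reduction='sum')
    optimizer = make_optimizer(model.parameters())
    first_loss = None
    for _ in range(epochs):
        loss = criterion(model(x_data), y_data)
        if first_loss is None:
            first_loss = loss.item()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    with torch.no_grad():
        final_loss = criterion(model(x_data), y_data).item()
    return first_loss, final_loss

# lr=0.01 for every optimizer is an assumption, not a tuned value
optimizers = {
    'SGD': lambda p: torch.optim.SGD(p, lr=0.01),
    'ASGD': lambda p: torch.optim.ASGD(p, lr=0.01),
    'Adagrad': lambda p: torch.optim.Adagrad(p, lr=0.01),
    'Adam': lambda p: torch.optim.Adam(p, lr=0.01),
    'Adamax': lambda p: torch.optim.Adamax(p, lr=0.01),
    'RMSprop': lambda p: torch.optim.RMSprop(p, lr=0.01),
    'Rprop': lambda p: torch.optim.Rprop(p, lr=0.01),
}

results = {name: train_with(make) for name, make in optimizers.items()}
for name, (first, final) in results.items():
    print(f"{name:8s} loss {first:.4f} -> {final:.6f}")
```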

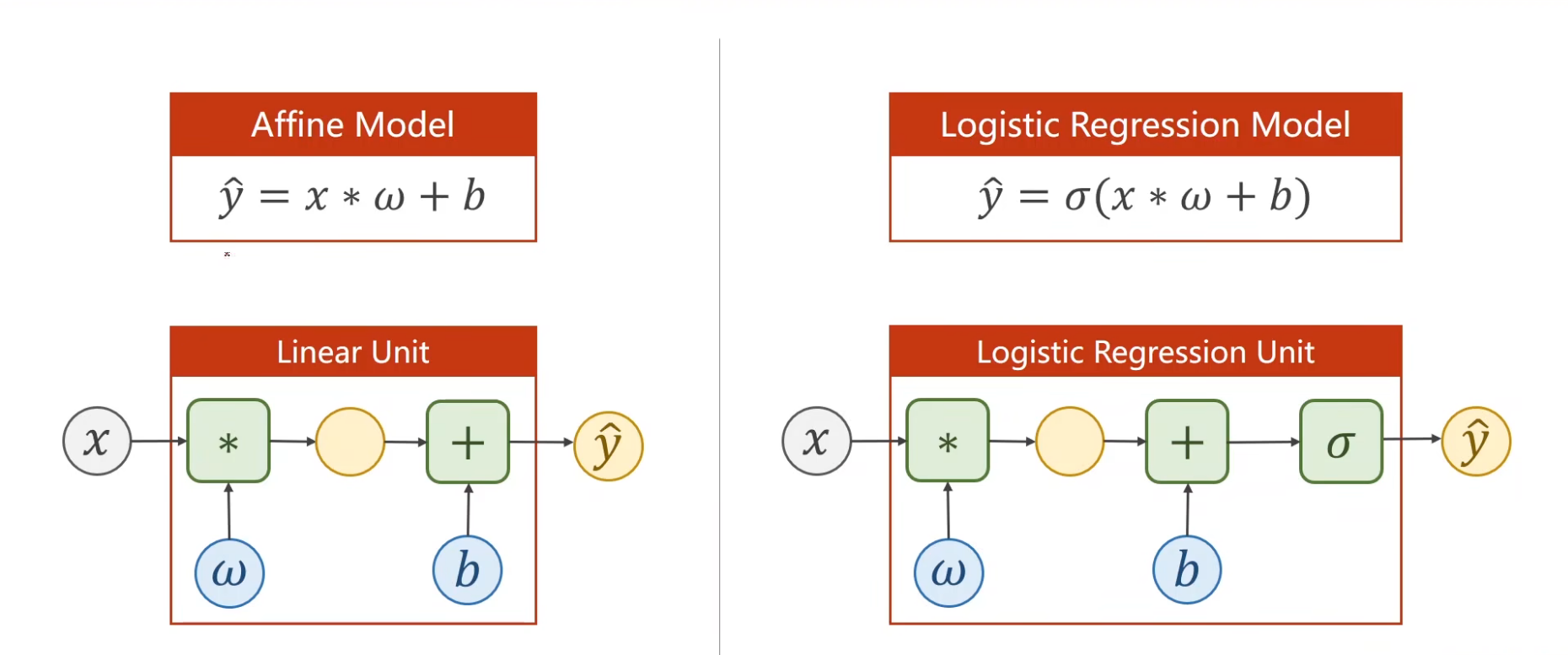

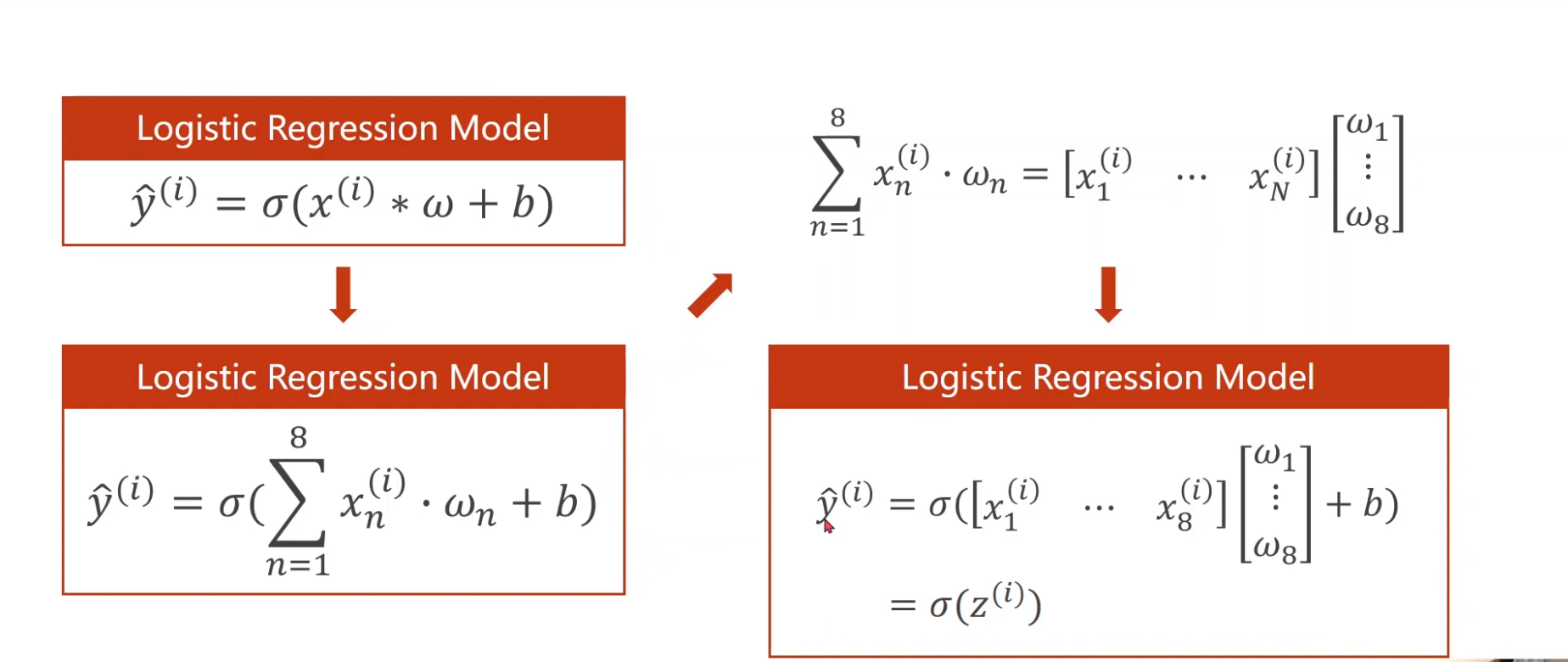

Logistic Regression

Logistic regression is used for classification, e.g. binary yes/no decisions.

Loading a dataset with torchvision:

```python
import torchvision

train_set = torchvision.datasets.MNIST(root='../dataset.mnist', train=True, download=True)
test_set = torchvision.datasets.MNIST(root='../dataset.mnist', train=False, download=True)
```

Other example datasets:

MNIST/Fashion-MNIST

CIFAR-10/CIFAR-100

ImageNet

COCO

Sigmoid (logistic) function: $$\sigma(x) = \frac{1}{1+e^{-x}}$$
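The formula can be checked against PyTorch's built-in `torch.sigmoid`. This small sketch evaluates both on integer points from -6 to 6 (an arbitrary choice); the outputs stay strictly between 0 and 1, which is why they can be read as probabilities.

```python
import torch

def sigmoid(x):
    # sigma(x) = 1 / (1 + e^{-x})
    return 1.0 / (1.0 + torch.exp(-x))

x = torch.linspace(-6.0, 6.0, steps=13)  # -6, -5, ..., 6
ours = sigmoid(x)
print(ours[6].item())                      # sigma(0) = 0.5
print(torch.allclose(ours, torch.sigmoid(x)))
```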

Loss function and optimizer:

$$loss = J(\theta) = -\frac{1}{N}\sum_{n=1}^{N}\left[y_n\log\hat{y}_n + (1-y_n)\log(1-\hat{y}_n)\right]$$

BCE loss (binary cross-entropy):

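If $y = 1$: $loss = -\log\hat{y}$, so the loss shrinks as $\hat{y}$ approaches 1.

If $y = 0$: $loss = -\log(1-\hat{y})$, so the loss shrinks as $\hat{y}$ approaches 0.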
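The BCE formula can be verified against `torch.nn.BCELoss`, whose default `reduction='mean'` matches the $\frac{1}{N}$ factor. The predicted probabilities and labels below are made-up values for illustration.

```python
import torch

y_hat = torch.tensor([0.9, 0.2, 0.7])  # predicted probabilities (made-up values)
y = torch.tensor([1.0, 0.0, 1.0])      # true labels

# Formula from above, averaged over N samples
manual = -(y * torch.log(y_hat) + (1 - y) * torch.log(1 - y_hat)).mean()

# PyTorch's built-in BCE loss (default reduction='mean')
builtin = torch.nn.BCELoss()(y_hat, y)
print(manual.item(), builtin.item())
```

The training code below uses `reduction='sum'`, which drops the $\frac{1}{N}$ factor and only rescales the gradient.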

Code:

```python
import torch
import numpy as np
import matplotlib.pyplot as plt

# Hours studied -> fail (0) / pass (1)
x_data = torch.tensor([[1.0], [2.0], [3.0]])
y_data = torch.tensor([[0.0], [0.0], [1.0]])

class LogisticRegressionModel(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(1, 1)

    def forward(self, x):
        # torch.sigmoid replaces F.sigmoid (F was never imported, and F.sigmoid is deprecated)
        y_pred = torch.sigmoid(self.linear(x))
        return y_pred

model = LogisticRegressionModel()
# Summed binary cross-entropy; replaces size_average=False, reduce=True
criterion = torch.nn.BCELoss(reduction='sum')
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

for epoch in range(1000):
    y_pred = model(x_data)
    loss = criterion(y_pred, y_data)
    print(epoch, loss.item())

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Plot the predicted probability of passing for 0 to 10 hours
x = np.linspace(0, 10, 200)
x_t = torch.tensor(x, dtype=torch.float32).view((200, 1))
with torch.no_grad():
    y_t = model(x_t)
y = y_t.numpy()
plt.plot(x, y)
plt.plot([0, 10], [0.5, 0.5], c='r')  # decision threshold at p = 0.5
plt.xlabel('Hours')
plt.ylabel('Probability of Pass')
plt.grid()
plt.show()
```

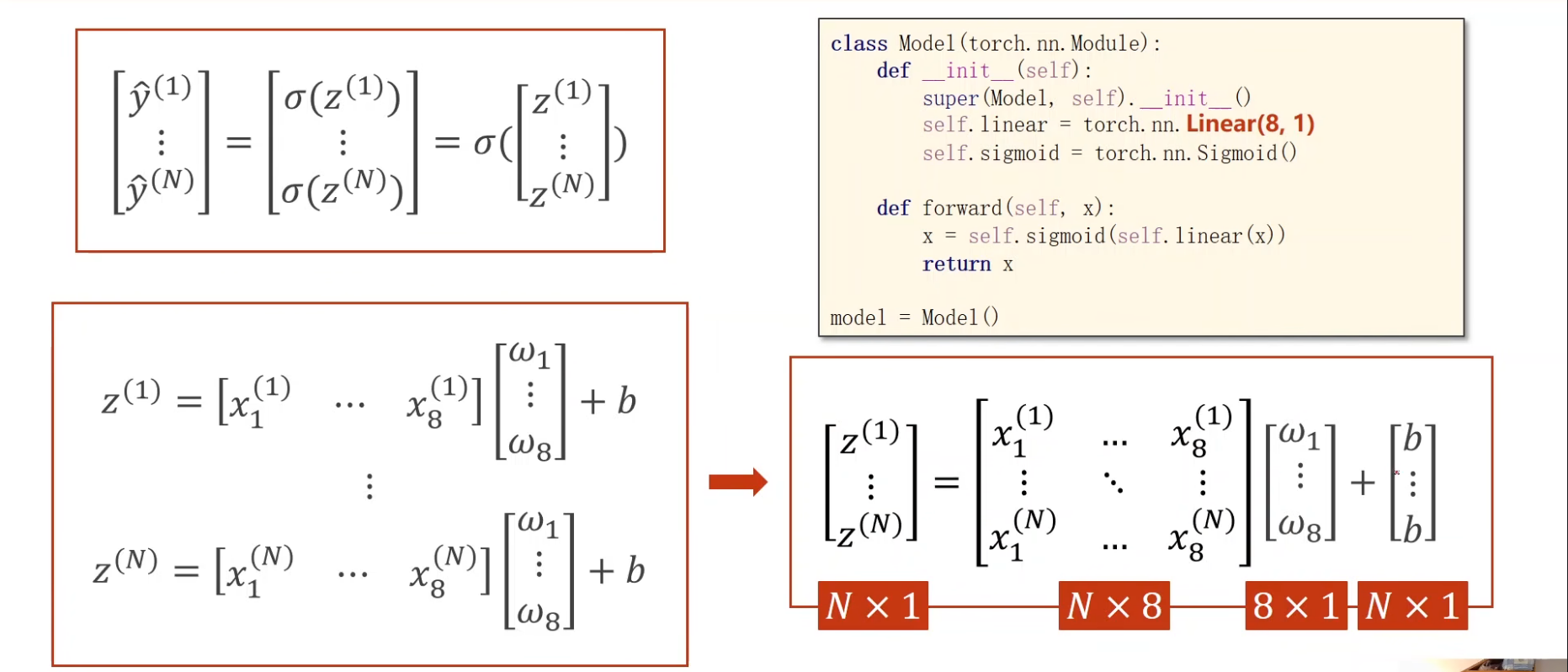

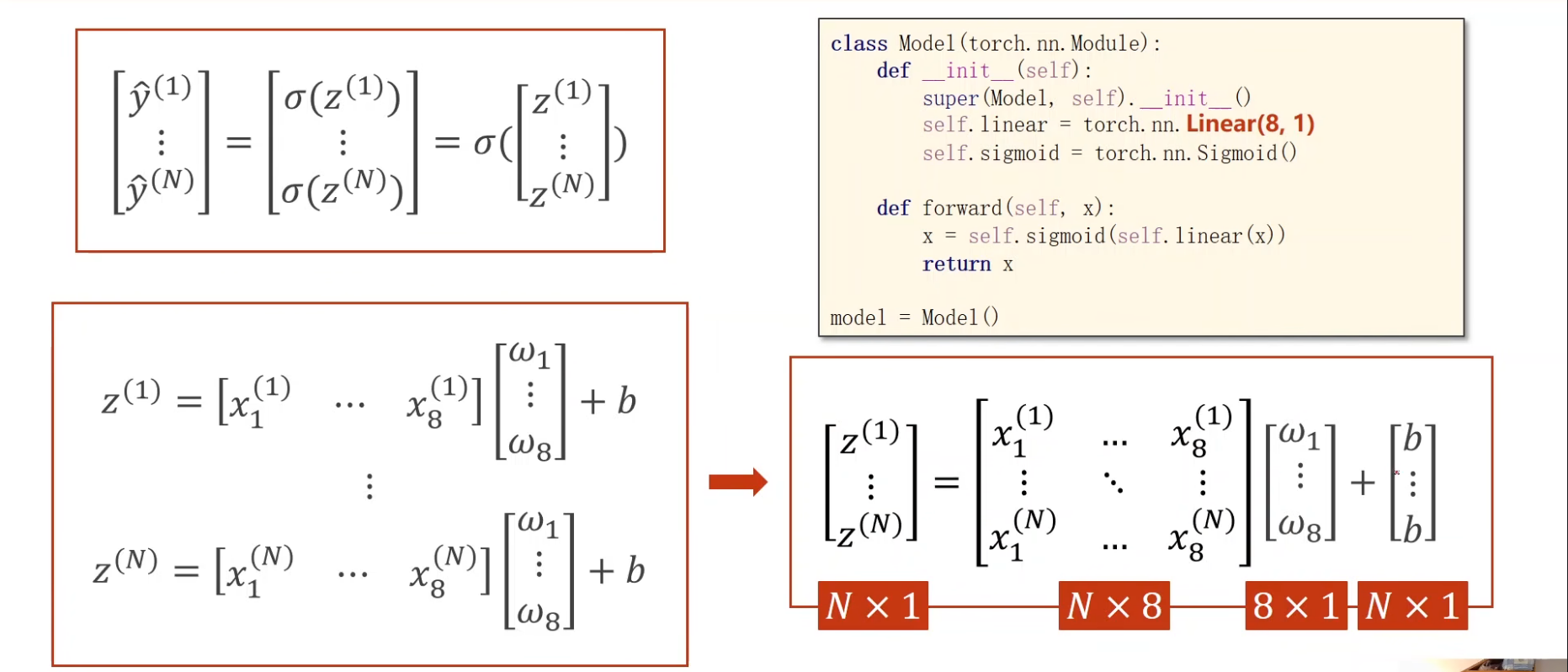

Multiple Dimension

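Each input variable is called a feature.

As an example, take inputs with 8 features (an 8-dimensional input).

Stacking linear layers changes the dimension step by step (8 → 6 → 2 → 1):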

```python
import torch

class Model(torch.nn.Module):
    def __init__(self):
        super().__init__()  # fixes the original typo super(Model.self)
        # 8 input features -> 6 -> 2 -> 1 output
        self.linear1 = torch.nn.Linear(8, 6)
        self.linear2 = torch.nn.Linear(6, 2)
        self.linear3 = torch.nn.Linear(2, 1)
        self.sigmoid = torch.nn.Sigmoid()

    def forward(self, x):
        x = self.sigmoid(self.linear1(x))
        x = self.sigmoid(self.linear2(x))
        x = self.sigmoid(self.linear3(x))
        return x  # probability in (0, 1) for each sample
```
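To see how each layer changes the dimension, the same architecture can be written with `torch.nn.Sequential` and fed a batch. This is a sketch assuming a hypothetical batch of 4 random samples with 8 features each; it prints the output shape after every layer.

```python
import torch

torch.manual_seed(0)

# Same layer sizes as the Model class, written with nn.Sequential
model = torch.nn.Sequential(
    torch.nn.Linear(8, 6), torch.nn.Sigmoid(),
    torch.nn.Linear(6, 2), torch.nn.Sigmoid(),
    torch.nn.Linear(2, 1), torch.nn.Sigmoid(),
)

x = torch.randn(4, 8)  # 4 samples x 8 features (random placeholder data)
h = x
with torch.no_grad():
    for layer in model:
        h = layer(h)
        print(type(layer).__name__, tuple(h.shape))  # (4, 6) ... (4, 1)
    out = model(x)
```

The batch dimension (4) is untouched; only the feature dimension shrinks from 8 to 1.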
